HealthManagement, Volume 24 - Issue 1, 2024

The MedTech sector is in the midst of a digital revolution, with AI leading the way. In this tightly regulated sector, there is an increasing demand for a well-established regulatory framework that balances innovation with patient safety. This article explores the current applications of AI in the MedTech sector, its key areas of utilisation, the challenges encountered in AI integration, and the ongoing evolution of the regulatory framework.

Key Points

- Machine learning intersects with medical device development, driving innovation, treatment personalisation, and overall patient care.

- Adequately addressing the hurdles and complexities associated with integrating machine learning into medical devices translates to tangible benefits for patients, from enhanced diagnostics to personalised treatments.

- Regulatory frameworks are essential for adapting to the challenges and opportunities posed by machine learning in medical devices, ensuring safety and performance.

Introduction

The rapid evolution of information technology over the past 50 years is transforming our healthcare institutions from paper-based organisations into smart hospitals, a term outlined by the European Union Agency for Cybersecurity (ENISA) for settings in which the integration of technology enhances healthcare services, efficiency, and patient outcomes while addressing cybersecurity challenges. Machine learning (ML), a subset of artificial intelligence (AI), plays a crucial role in realising the vision of a smart hospital by leveraging data-driven insights for improved diagnostics, treatment personalisation, and operational efficiency. Systematic reliance on medical devices by both patients and healthcare providers accelerates technological advancements, which have played a pivotal role in enhancing patient outcomes, improving diagnostics, and revolutionising treatment methodologies. Enabling healthcare professionals to make faster and more accurate decisions and/or to automate monitoring processes has been among the main improvement objectives for many stakeholders. In recent years, machine learning has been one of the most transformative technologies within this sector, particularly within the realm of medical devices, where the development of algorithms enables computers to learn from data and make predictions or decisions without explicit programming. In healthcare, the utilisation of machine learning has been a paradigm shift, allowing for the analysis of vast amounts of medical data to derive meaningful insights. From predictive analytics to image recognition, machine learning has demonstrated its prowess in augmenting clinical decision-making processes, thus fostering a new era in healthcare.

The development of ML-based systems offers a tremendous opportunity to improve patient care and diagnostic reliability and to address the shortage of healthcare professionals. Adequately trained ML algorithms can accurately identify and classify abnormalities based on radiological scans, sonograms, video streams or any form of image or sound. This has led to enhanced diagnostic accuracy and efficiency, particularly in areas such as mammography, radiology, cardiology, pathology, oncology and neuroimaging. In radiology, AI algorithms have been developed to help in the detection and classification of different conditions such as lung nodules, breast cancer and brain abnormalities. In pathology, AI has been shown to improve the accuracy and efficiency of disease diagnosis through the automatic analysis of histopathological samples (Strohm et al. 2020). In cardiology, AI-powered tools analyse electrocardiograms (ECGs) to swiftly identify patterns indicative of cardiac issues. In oncology, AI aids in the interpretation of complex genetic data to personalise cancer treatment plans. The Molecular Oncology journal (2020) showcased how AI algorithms can predict patient responses to different cancer therapies by analysing genetic and clinical data.

In pneumology, AI-based sound recognition is applied to detect and classify respiratory diseases, which extends the capabilities of the stethoscope and automates its use.

At the time of writing this article, the website “AI for Radiology” lists over 60 ML-based systems that have been granted a CE mark under the Medical Device Regulation. In the United States, the FDA curates a registry of medical devices incorporating AI/ML technology that are authorised for sale. This registry shows that the first FDA approval of an AI/ML-enabled device occurred in 1995. As of July 30, 2023, 692 devices had received clearance or approval from the FDA.

Challenges with AI in Healthcare

The integration of artificial intelligence (AI) in healthcare, particularly in the realm of medical devices, presents both promising advancements and notable challenges. While AI holds the potential to revolutionise diagnostics, treatment, and patient care, several hurdles must be addressed to ensure its effective and ethical implementation.

One major challenge is the need for robust regulatory frameworks to govern the development and deployment of AI-powered medical devices. Ensuring the safety, efficacy, and data privacy of these devices is crucial to prevent potential harm to patients and maintain public trust. Striking a balance between innovation and regulation is essential to foster the responsible use of AI in healthcare.

Another central challenge lies in the quality and representativeness of data sets used for training and validating AI algorithms. Comprehensive and diverse data sets are essential to ensure the accuracy and reliability of medical devices. Inadequate or biased data can compromise the performance of AI models, leading to inaccurate diagnoses and treatment recommendations. For example, consider an AI algorithm trained on a data set that lacks diversity in terms of demographic representation, featuring primarily tumours from a specific subset of the population. This limitation poses a risk to the model's ability to generalise effectively across diverse patient profiles: the algorithm may perform well within the constraints of the training data but could exhibit inaccuracies or biases when presented with cases from a more varied patient population. Therefore, the development and use of robust data sets that encompass a wide range of demographics and medical scenarios are critical to the success of AI in healthcare.
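One way to make this risk visible is to report performance separately for each demographic subgroup rather than as a single pooled figure. The following Python snippet is a minimal sketch of that idea and is not taken from any regulatory guidance; the function name `sensitivity_by_group`, the group labels and the records are hypothetical and invented purely for illustration.

```python
from collections import defaultdict


def sensitivity_by_group(records):
    """Return the sensitivity (true positive rate) per subgroup.

    `records` is an iterable of (group, true_label, predicted_label)
    tuples, where labels are 1 (disease present) or 0 (disease absent).
    Groups without any positive case are omitted from the result.
    """
    true_pos = defaultdict(int)
    false_neg = defaultdict(int)
    for group, y_true, y_pred in records:
        if y_true == 1:
            if y_pred == 1:
                true_pos[group] += 1
            else:
                false_neg[group] += 1
    groups = set(true_pos) | set(false_neg)
    return {g: true_pos[g] / (true_pos[g] + false_neg[g]) for g in groups}


# Hypothetical, made-up validation records (assumption, not real data).
records = [
    ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 1), ("group_a", 1, 0),
    ("group_b", 1, 1), ("group_b", 1, 0), ("group_b", 1, 0), ("group_b", 1, 0),
    ("group_a", 0, 0), ("group_b", 0, 0),
]
per_group = sensitivity_by_group(records)
for group in sorted(per_group):
    print(group, per_group[group])
```

In this invented example, a large gap between the two subgroups would be a signal to re-examine how representative the training data are before drawing conclusions about overall performance.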

The issue of bias in AI poses another substantial hurdle. Biases present in training data or algorithms can perpetuate disparities in healthcare outcomes. Mitigating bias requires a meticulous examination of data collection processes and ongoing monitoring and adjustment of algorithms to ensure fairness and equity in medical decision-making, thereby ensuring adequate performance. The healthcare industry must prioritise efforts to address and eliminate biases in AI systems to promote safe and effective healthcare practices.

Trustworthiness is a critical factor in fostering the adoption of AI in healthcare. Establishing trust among healthcare professionals and patients is challenging, particularly when dealing with complex AI algorithms. Transparency in AI decision-making processes, clear communication of the limitations and capabilities of medical devices, and adherence to rigorous ethical and scientific/ technical standards are essential to build and maintain trust in AI technologies and to safeguard their safety and performance. Ensuring that AI systems are explainable and interpretable by healthcare practitioners further contributes to their acceptance and integration into clinical practice.

Interoperability issues pose another significant challenge. Integrating AI technologies into existing healthcare systems and ensuring seamless communication between different devices and platforms is complex. Standardisation efforts are necessary to facilitate interoperability and enable the efficient exchange of information, promoting a cohesive and integrated healthcare ecosystem.

Healthcare professionals also face challenges related to the integration of AI into their workflow. Adequate training and education are crucial to empower clinicians to effectively use AI tools and interpret their outputs. Additionally, fostering collaboration between technologists and healthcare practitioners is essential to develop solutions that align with real-world clinical needs.

In conclusion, while AI in healthcare, particularly in the context of medical devices, holds immense potential for improving patient outcomes, ensuring the safety and performance of AI systems is challenging because of a combination of ethical, educational, regulatory, and technological factors, such as privacy and security, the monitoring of updates, data quality and bias. Addressing these challenges requires a multidisciplinary approach involving collaboration between technologists, healthcare professionals, regulators, and policymakers.

Regulatory Landscape: Medical Devices and Artificial Intelligence

European Union (EU)

EU Medical Device Landscape

The medical device regulatory landscape in the European Union (EU) is governed by two key regulations: the Medical Device Regulation (MDR; EU 2017/745) and the In Vitro Diagnostic Regulation (IVDR; EU 2017/746). These regulations, implemented to enhance patient safety and ensure the effectiveness of medical devices, have replaced previous directives (MDD 93/42/EEC; AIMDD 90/385/EEC; IVDD 98/79/EEC).

While the Medical Device Regulation (MDR) covers a broad range of medical devices, including implants, diagnostic equipment, and software, the In Vitro Diagnostic Regulation (IVDR) specifically addresses in vitro diagnostic medical devices, such as laboratory tests, diagnostic reagents and IVD software.

Both regulations introduce stricter requirements for pre-market assessment, post-market surveillance, and quality management systems. Notably, they place a greater emphasis on transparency, traceability, and market surveillance, underlining the importance of ensuring the safety and efficacy of medical devices in the EU market. Manufacturers must adhere to specific conformity assessment procedures, demonstrate compliance with general safety and performance requirements, and actively engage in post-market surveillance. The EU’s regulatory framework for medical devices aims to align with technological advancements while maintaining a strong focus on patient safety and public health.

EU Artificial Intelligence Act (AIA)

The EU AI Act is a comprehensive legislative framework proposed by the European Union to regulate the development and use of AI technologies within its member states. The first draft was introduced in April 2021 with the intention of addressing the ethical and legal challenges associated with AI. The Act aims to establish a harmonised framework for the development, deployment, and use of AI technologies across diverse sectors (horizontal legislation). On December 9, 2023, the Members of the European Parliament (MEPs) reached a political deal with the Council, and the final agreed text is currently awaiting adoption by both Parliament and Council to become EU law.

Key provisions include establishing risk-based categorisation for AI systems, outlining requirements for high-risk applications, promoting transparency and human oversight, and establishing a European Artificial Intelligence Board to oversee and enforce compliance.

The Act categorises AI applications into four risk levels (unacceptable risk, high risk, limited risk, and minimal risk) and introduces specific requirements and obligations based on these risk levels. For high-risk AI systems, the Act mandates conformity assessments, data and record-keeping obligations, transparency requirements, and human oversight. Furthermore, medical devices that use AI and require the involvement of a notified body for conformity assessment under the respective EU regulations are categorised as high-risk AI systems, which obliges their manufacturers to comply with the additional requirements set out in the Act.

Although the EU AI Act seeks to balance innovation with ethical considerations, it poses challenges for medical device manufacturers. Key challenges include increased scrutiny on safety and transparency requirements, considerations for data privacy, effective allocation of resources, and adapting to evolving regulations. Striking a balance between compliance and innovation is crucial for manufacturers to navigate this intricate regulatory landscape successfully.

United States of America

U.S. Medical Device Landscape

Medical device regulations in the U.S. fall under the authority of the U.S. Food and Drug Administration (FDA), an agency within the Department of Health and Human Services (HHS). The FDA regulates food, drugs, biologics, cosmetics, veterinary medicine, and tobacco, while its Center for Devices and Radiological Health (CDRH) is primarily responsible for medical device regulation, with assistance from the Center for Biologics Evaluation and Research (CBER).

Under the Medical Device Amendments (MDA) of 1976, medical devices are classified into three classes based on the risk posed to the consumer. Each class comprises different regulatory controls, which become more stringent with increasing risk class: Class III devices are the most highly regulated due to the risk involved and require premarket approval from the FDA, while Class I devices can be listed with the FDA by the manufacturer. Once manufacturers have determined the risk class of their device, they must determine the appropriate regulatory pathway. High-risk devices (Class III) typically undergo the premarket approval (PMA) process, involving comprehensive scientific evidence to ensure safety and efficacy. Moderate-risk devices (Class II) often qualify for the 510(k) pathway (PMN; Pre-Market Notification), requiring substantial equivalence to a predicate device. Low-risk devices (Class I) are usually exempt from PMN and/or other quality systems requirements and can be self-listed.

Alongside the conventional pathways, there is the De Novo pathway, a regulatory route for novel medical devices with no clear predicate. Manufacturers submit evidence to establish the device's safety and effectiveness, leading to a new classification. It provides a route for innovative technologies with moderate risk, facilitating market entry for devices that do not fit the criteria for the 510(k) pathway. Lastly, expedited options like the Breakthrough Devices Program and the Accelerated Approval Program accelerate access to innovative technologies, streamlining regulatory processes for devices addressing critical unmet medical needs.
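The relationship between risk class and typical pathway described above can be summarised as a simple lookup. The Python sketch below is a deliberate simplification for illustration only: the names `Risk` and `typical_pathway` are hypothetical, the mapping reflects only the typical cases described in this article, and actual classification depends on the FDA's product-specific determinations.

```python
from enum import Enum


class Risk(Enum):
    """FDA device classes as described in the text."""
    LOW = "Class I"
    MODERATE = "Class II"
    HIGH = "Class III"


def typical_pathway(risk: Risk, has_predicate: bool = True) -> str:
    """Return the pathway that typically applies (simplified assumption)."""
    if risk is Risk.HIGH:
        # High-risk devices typically undergo premarket approval.
        return "PMA"
    if risk is Risk.MODERATE:
        # 510(k) requires substantial equivalence to a predicate device;
        # novel moderate-risk devices without one may use De Novo.
        return "510(k)" if has_predicate else "De Novo"
    # Low-risk devices are usually exempt and can be self-listed.
    return "Exempt (registration and listing)"


print(typical_pathway(Risk.MODERATE, has_predicate=False))
```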

Medical device manufacturers are subjected to a range of regulatory controls i.e., requirements to ensure that devices are not adulterated or misbranded and to otherwise assure their safety and effectiveness for their intended use. These requirements include, for example, premarket review, labelling, establishment registration and device listing, and quality system regulation (good manufacturing practices for devices).

One of the biggest challenges in regulating AI/ML-enabled medical devices is the rapidly evolving nature of the technology, which requires constant adaptation to keep pace with emerging advancements and uphold standards of safety and effectiveness. Another challenge is the lack of clear guidelines and standards for AI/ML in medical devices, which causes confusion for manufacturers regarding the required regulatory measures and hinders regulators' ability to assess the safety and effectiveness of such devices.

Artificial Intelligence

There isn't a comprehensive standalone regulation specifically for artificial intelligence (AI) in the United States. However, various existing laws, regulations, and agencies address certain aspects of AI applications, depending on the context and industry. The following points should be considered:

- Sector-Specific Regulations: Certain sectors, such as healthcare, have specific regulations that indirectly apply to AI systems. For example, the safety and performance of AI in medical devices will be evaluated by the FDA during its clearance/approval process.

- Consumer Protection and Privacy Laws: Regulations like the Federal Trade Commission (FTC) Act and state-specific data protection laws govern consumer protection and privacy. They may be applicable when AI systems involve personal data.

- Ethical and Fairness Considerations: While not regulatory in nature, there is a growing emphasis on ethical AI practices. Various organisations, including the National Institute of Standards and Technology (NIST), are developing guidelines and principles to promote ethical AI development.

- National AI Strategy: The U.S. government has shown interest in AI development through initiatives like the National Artificial Intelligence Initiative Act of 2020, which focuses on advancing AI research and development.

- Congressional and Agency Inquiries: There have been discussions and inquiries within the U.S. Congress and federal agencies about the need for AI regulation. Proposals and discussions vary, including considerations for transparency, accountability, and bias mitigation in AI systems.

It's essential to stay updated on developments, as the landscape of AI regulation is dynamic, and new initiatives may emerge. As of now, there isn't a singular, comprehensive federal law dedicated solely to regulating AI in the United States.

State of the Art Considerations

The field of AI is advancing at an unprecedented pace, with continual breakthroughs in machine learning algorithms, computational power, and data analytics. In this dynamic landscape, harnessing the full potential of AI in medical devices requires prioritising "state-of-the-art considerations." This approach not only reflects ongoing advancements in technology but also addresses critical factors that contribute to the effectiveness, safety, and ethical deployment of AI in healthcare.

Use of Regulatory Sandboxes

The term ‘regulatory sandbox’ can be traced back to financial technology (fintech), where regulatory sandboxes have existed since 2014. The UK Financial Conduct Authority (UK FCA) has been a leader in this concept, establishing its regulatory sandbox in 2014, and the concept has since been replicated in about 40 jurisdictions. The term can be generally defined as a testbed for a selected number of projects where certain laws or regulations are set aside, and the project receives guidance and monitoring from a competent authority.

According to a World Bank study, more than 50 countries are currently experimenting with fintech sandboxes. In 2018, Japan introduced a sandbox regime open to organisations and companies both inside and outside Japan willing to experiment with new technologies, including blockchain, AI, and the internet of things (IoT), in fields such as financial services, healthcare and transportation. In Europe, both Norway and the United Kingdom (UK) have developed AI sandboxes. Norway established a regulatory sandbox as part of its national AI strategy, to provide guidance on personal data protection for private and public companies.

In the legislative context, the EU’s AI Act introduces the concept of ‘AI regulatory sandboxes’, with the objective of fostering AI innovation by establishing a controlled experimentation and testing environment for innovative AI technologies, products, and services during the development phase, before their placement on the market. To this end, Spain has announced that it will pilot a regulatory sandbox aimed at testing the requirements of the legislation (EU AIA), as well as how conformity assessments and post-market activities may be overseen. Deliverables from this pilot include documentation of obligations and how they can be implemented, and methods for control and follow-up that can be applied by the national supervisory authorities responsible for implementing the regulation. Reflecting the cross-state approach desired by the Act, other member states will be able to follow or join the pilot. As accessing such AI regulatory sandboxes typically involves collaboration with the regulatory agencies or member states that offer such environments, manufacturers of AI systems are advised to closely monitor and stay connected to the respective regulatory agencies in their member state.

Finally, the use of regulatory sandboxes not only helps manufacturers of AI-based systems develop their products in a regulation-compliant way, but also helps them avoid potential legal risks and better understand regulatory and/or statutory expectations. Furthermore, testing in a controlled environment also mitigates the risks and unintended consequences (such as unseen security flaws) of bringing a new technology to market, and can potentially reduce the time-to-market cycle for new products.

Guidance on AI/ML Practices

The U.S. FDA (Food and Drug Administration), Health Canada and UK’s MHRA (Medicines and Healthcare products Regulatory Agency) jointly identified 10 guiding principles for the development of Good Machine Learning Practices. These guiding principles will help promote safe, effective and high-quality medical devices that use AI/ML.

In its discussion paper ‘Proposed Regulatory Framework for Modifications to Artificial Intelligence/Machine Learning (AI/ML)-Based Software as a Medical Device (SaMD)’, the FDA presented its thinking on reviewing changes made to SaMDs involving AI/ML technology and requested feedback from the industry [15]. Similarly, guidance from other regulators and/or industry trade associations on medical devices incorporating AI/ML technologies should be taken into consideration wherever possible. Following the guidance proposed by regulators not only supports regulatory compliance but also helps manufacturers better understand regulators' expectations and can reduce the regulatory review time needed for a product’s market entry.

International Standards

International standards are globally recognised guidelines and specifications developed to ensure the consistency, safety, and quality of products, services, and systems across borders. They provide a common language and framework for organisations to meet specific requirements, fostering interoperability and facilitating international trade.

ISO, or the International Organization for Standardization, is a non-governmental international body that develops and publishes these standards. Comprising representatives from various national standards organisations, ISO sets standards in diverse areas, including technology, healthcare, manufacturing, and more. ISO standards contribute to innovation, efficiency, and the assurance of quality on a global scale.

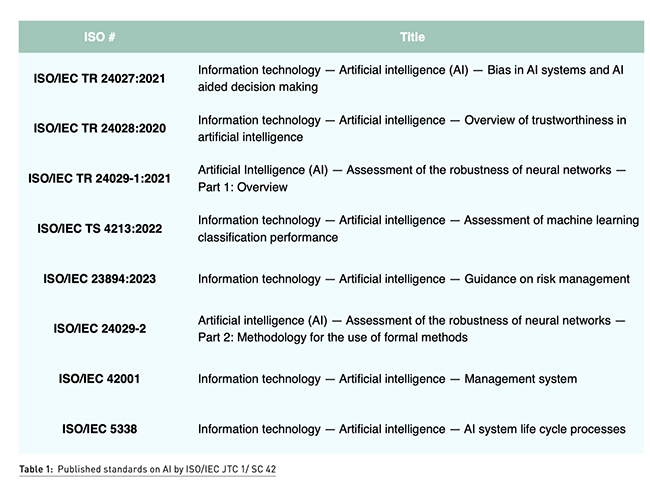

At the time of writing this document, there are a few standards published by the standardisation committees that manufacturers of AI systems can use to demonstrate the robustness and performance of their AI system. Out of 24 published standards and 32 standards under development by ISO/IEC JTC1/SC 42 Artificial Intelligence, Table 1 identifies some key standards that should be considered by manufacturers of AI-enabled medical devices.

Proposed Method for Regulatory Compliance for an AI-Based Medical Device

Achieving regulatory compliance for medical devices involves a meticulous approach to development, validation, and documentation. This is often achieved by implementing a quality management system and by establishing and maintaining technical documentation throughout the lifecycle phases.

Implementing a Quality Management System (QMS) is essential for medical device manufacturers due to various regulatory, safety, and operational considerations. A QMS provides a structured framework for managing and continually improving the processes involved in the design, development, manufacturing, and distribution of medical devices. Manufacturers of AI systems should consider the newly published standard ISO/IEC 42001:2023.

Secondly, manufacturers of AI systems should, wherever possible, adopt a hybrid approach that integrates the requirements of IEC 62304 (Medical device software – Software life cycle processes) with those of ISO/IEC 5338 (AI system life cycle processes). Furthermore, for AI systems based on machine learning, manufacturers should align their AI development practices with the recommendations of Good Machine Learning Practices (GMLP). Adhering to this approach should help manufacturers of AI systems meet the expectations of the regulatory authorities and facilitate market entry.

Thirdly, segregation of data for training and validation in healthcare machine learning models takes on heightened significance, particularly considering the necessity to validate models in clinical trials. Rigorous validation is crucial to ensure that the model’s performance is robust and clinically relevant. By separating training and validation datasets, the model undergoes assessment against diverse, unseen data, mirroring the conditions it would encounter in real-world clinical settings. This process not only guards against overfitting but also enhances the model’s generalisability and reliability in practical healthcare applications. The careful segregation of data for training and validation is thus an indispensable step in the development and validation of machine learning models that can meet the stringent standards of clinical practice and trials in the healthcare domain.
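As an illustration of such segregation, the Python sketch below splits a data set at patient level, so that all samples from one patient fall into either the training set or the validation set but never both. Grouping by patient is an assumption added here for illustration, since samples from the same patient are usually correlated; the function `split_by_patient`, the field names and the sample data are hypothetical.

```python
import random


def split_by_patient(samples, validation_fraction=0.2, seed=0):
    """Split samples into training and validation sets by patient ID.

    Each sample is a dict with a "patient_id" key. All samples of a
    given patient are assigned to the same set.
    """
    patient_ids = sorted({s["patient_id"] for s in samples})
    rng = random.Random(seed)  # fixed seed keeps the split reproducible
    rng.shuffle(patient_ids)
    n_val = max(1, round(len(patient_ids) * validation_fraction))
    val_ids = set(patient_ids[:n_val])
    train = [s for s in samples if s["patient_id"] not in val_ids]
    val = [s for s in samples if s["patient_id"] in val_ids]
    return train, val


# Hypothetical data set: 10 patients with 3 scans each (made-up names).
samples = [
    {"patient_id": f"P{i // 3}", "image": f"scan_{i}.png"} for i in range(30)
]
train, val = split_by_patient(samples)
print(len(train), len(val))
```

Fixing the random seed and recording which patients were held out also makes the split traceable in the technical documentation.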

Another key consideration is the adequate definition of performance parameters, which are indispensable in evaluating the efficacy of medical devices based on machine learning algorithms. These quantitative metrics, including accuracy, precision, repeatability, and others, form a critical foundation for assessing how well a model fulfils its intended use and associated claims. Clear and well-defined performance endpoints are necessary to guide the development, fine-tuning, and assessment of machine learning models. Safety considerations, such as identifying errors and false predictions, are paramount to ensuring the reliability and clinical suitability of these algorithms.
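For a binary classifier, several of these metrics can be derived from the counts of correct and false predictions. The Python sketch below is a minimal illustration: accuracy and precision are named in the text, while sensitivity and specificity are added here as common examples of the "others"; the function name `binary_metrics` and the labels are hypothetical.

```python
def binary_metrics(y_true, y_pred):
    """Compute basic performance metrics from binary labels (0 or 1)."""
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    tn = sum(1 for t, p in pairs if t == 0 and p == 0)  # true negatives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives

    def ratio(numerator, denominator):
        # Return None when the metric is undefined (empty denominator).
        return numerator / denominator if denominator else None

    return {
        "accuracy": ratio(tp + tn, len(pairs)),
        "precision": ratio(tp, tp + fp),
        "sensitivity": ratio(tp, tp + fn),
        "specificity": ratio(tn, tn + fp),
        "false_positives": fp,
        "false_negatives": fn,
    }


# Made-up reference labels and model predictions (assumption).
y_true = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
y_pred = [1, 1, 1, 0, 1, 0, 0, 0, 0, 0]
metrics = binary_metrics(y_true, y_pred)
print(metrics)
```

Reporting the raw false-positive and false-negative counts alongside the ratios keeps the safety-relevant errors visible instead of hiding them behind a single summary figure.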

The aforementioned considerations, coupled with the diligent implementation of systematic monitoring approaches and the application of state-of-the-art concepts, collectively empower manufacturers of AI-enabled medical devices to align with regulatory expectations. This proactive approach not only ensures compliance but also facilitates the incorporation of state-of-the-art technologies, allowing medical device manufacturers to navigate the complex regulatory landscape effectively while delivering innovative and high-quality solutions.

Conclusion

In summary, the integration of machine learning (ML) in medical devices offers transformative benefits for healthcare, enhancing diagnostics, personalised treatment, and operational efficiency. Despite this potential, many challenges, including technical, scientific and ethical constraints, have to be addressed when integrating ML into medical devices. The regulatory frameworks developed in the US and in the European Union provide general rules and principles designed to ensure that the use of ML results in safe and effective products such as medical devices.

While ML offers a tremendous opportunity for innovation and growth for medical device manufacturers, the systematic application of good practices in the development of state-of-the-art devices is necessary to ensure that their products not only meet regulatory expectations but also foster trust among healthcare professionals and patients, which is crucial for the successful deployment of AI in healthcare.

Conflict of Interest

None.

References:

Strohm L, Hehakaya C, Ranschaert ER et al. (2020) Implementation of artificial intelligence (AI) applications in radiology: hindering and facilitating factors. Eur Radiol. 30(10):5525-5532.