HealthManagement, Volume 20 - Issue 8, 2020

Traditionally, the radiology community has advocated peer review for quality assurance. The current trend is to focus more on peer learning, where learning from peers in a continuous improvement mode becomes more important than the number of diagnostic errors. Agfa HealthCare has developed a module that supports both peer review and peer learning in its Enterprise Imaging (EI) platform. HealthManagement.org spoke to Jan Kips and Danny Steels of Agfa HealthCare to learn more about this new module and how it can help facilitate learning in radiology.

Can you explain the peer learning feature and how it is relevant for the radiology environment?

Peer reviews are a fundamental part of the radiology workflow. They allow you to collect and evaluate data on reading errors and to meet your regulatory requirements. Diagnostic errors in radiology are, and have always been, a major concern. Research indicates that a radiologist commits 3 to 4 diagnostic errors every day (Bruno 2017) and that diagnostic errors contribute to an alarming 10% of patient deaths in the U.S. (McMains 2016). This matters even more given the current trend towards cross-site collaboration, where patient care increasingly depends on the performance of several radiology departments.

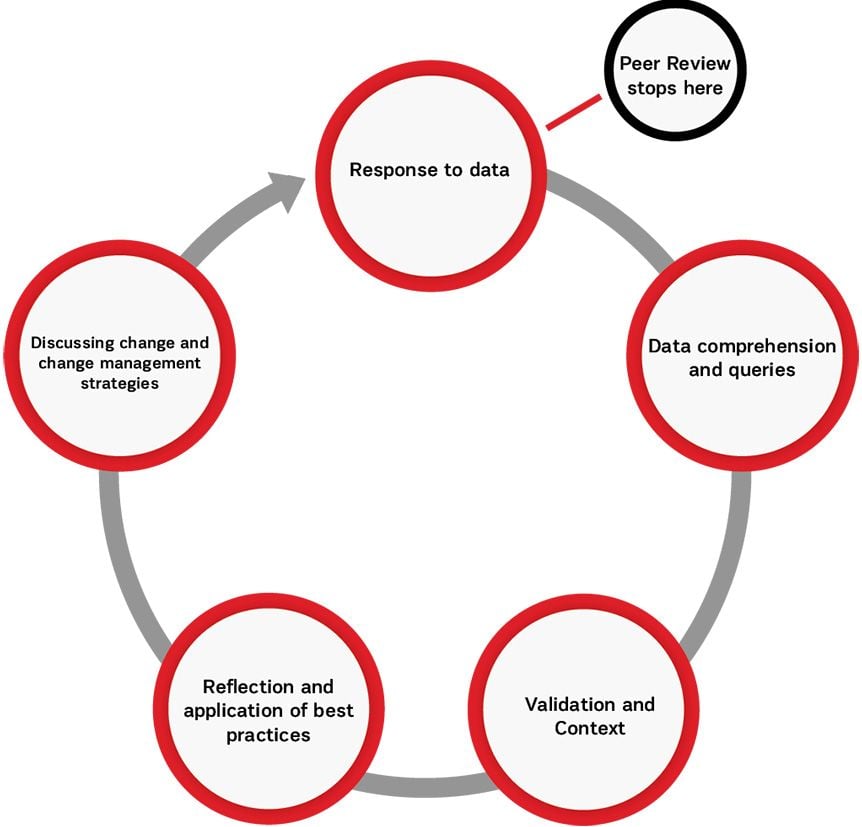

The traditional way to measure radiology performance has been through peer review, where radiologists evaluate and score their peers’ reports. However, while peer review focuses on how many errors were made, peer learning focuses on how and why an error was made (Haas et al. 2020; Larson et al. 2017).

The concept of peer learning is gaining traction. Various hospitals in the U.S. are already using it, either on top of the traditional peer review or as a replacement. Participating radiologists report that peer learning helps to improve patient care more than traditional peer review, encourages more people to participate in the feedback process, and facilitates learning for everyone involved.

At Agfa HealthCare, we believe there is clear value in having physicians trigger peer reviews themselves on studies that they see as learning opportunities. A whole range of situations in which one could see a learning opportunity would simply be missed with traditional peer review. A few examples:

• A user detects a learning opportunity while reading a study.

• Additional input from a clinician, multidisciplinary conference, laboratory or pathology result alters the report conclusion and offers a learning opportunity.

• A peer learning case is started as a result of a risk management meeting.

Beyond the focus on errors and negative feedback, there is now consensus that giving positive feedback is equally important. ‘Good calls’ provide important learning opportunities too. Both are proposed by the ACR and the RCR.

The current peer review used in radiology departments has drawbacks. You say that Agfa has developed a “true peer learning” workflow. Why do you think your tool is better than other available options?

Although initiated with the best intentions, traditional peer review has a number of drawbacks. Randomly selecting studies for peer review leads to fewer learning opportunities. Generally, 20% of the studies present 80% of the learning opportunity, so creating cases at random misses a lot of that opportunity. That is why many radiologists consider peer review a time-consuming activity that must be done for compliance reasons only. The focus on the number of discrepant findings may also lead to a blame culture, typically with little feedback to the report author.

Agfa’s peer learning module addresses these shortcomings by:

• Offering the possibility to trigger peer reviews both automatically and manually.

• Fully embedding the peer review workflow in the radiology workflow in Enterprise Imaging.

• Providing anonymised, built-in feedback loops that allow authors to learn from the advice of colleagues.

• Providing dedicated conference functionality to discuss the case, with the ability to follow up on recommendations or process changes.

• Offering a highly configurable workflow, allowing customers to tailor their workflow from traditional peer review to a peer learning workflow with conferences, and anything in between.

How does Agfa’s peer learning feature minimise the element of shame when identifying errors and/or mistakes? How does it offer a more positive approach?

It’s worth noting that changing the culture is essential, and is perceived as far more important and more difficult than implementing the right software. That being said, a few particular features of Agfa’s peer learning module can support this culture:

Anonymisation Mode

Both the patient and the original report author can be anonymised during the peer learning workflow. Privileged users can break the glass and override this anonymisation, for example when there are serious consequences for the patient and identification is required.

Note that in a true peer learning mindset, anonymisation is not required as there is no stigma attached to making errors. It’s all about learning from one’s mistakes and sharing these learning points. However, even in organisations where the peer learning mindset is present, there may be occasions on which anonymisation is desired. Think of a teaching session with students or a meeting with external participants. That’s why Agfa’s EI peer learning module also allows anonymisation per meeting (conference), in addition to system-level anonymisation.
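To make the two anonymisation levels and the break-the-glass override concrete, here is a minimal Python sketch. It is purely illustrative and is not Enterprise Imaging’s API: the names `Case`, `User` and `view_case`, the `[anonymised]` mask and the audit log are all assumptions made for this example.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

MASK = "[anonymised]"  # hypothetical placeholder shown instead of a name


@dataclass
class Case:
    patient: str
    author: str
    finding: str


@dataclass
class User:
    name: str
    privileged: bool = False  # assumed flag: may break the glass


def view_case(
    case: Case,
    user: User,
    system_anonymised: bool,
    conference_anonymised: bool = False,
    break_glass_reason: Optional[str] = None,
    audit_log: Optional[List[str]] = None,
) -> Dict[str, str]:
    """Return the fields a user sees for a peer learning case."""
    # Either the system-level or the per-conference setting hides identities.
    hidden = system_anonymised or conference_anonymised

    # A privileged user may override anonymisation by giving a reason.
    # Recording the override in an audit log is an assumption of this sketch.
    if hidden and break_glass_reason:
        if not user.privileged:
            raise PermissionError("only privileged users may break the glass")
        if audit_log is not None:
            audit_log.append(f"{user.name} broke the glass: {break_glass_reason}")
        hidden = False

    return {
        "patient": MASK if hidden else case.patient,
        "author": MASK if hidden else case.author,
        "finding": case.finding,  # the learning point itself is always visible
    }
```

In this sketch a regular reviewer always sees masked names while either anonymisation setting is on, and only a privileged user who supplies a reason sees the real identities.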

Asking for Additional Information

Imagine you’re performing a peer review and lack some information needed to make a thorough assessment, such as the patient’s clinical history, which is mentioned in neither the current nor the prior reports. Through a dedicated ‘request feedback’ task, the reviewer can request this additional information, even without knowing who the question is addressed to (in case the workflow is anonymised).

Importance of Feedback

Feedback is very important for building an open culture and allowing original report authors to actually learn from the peer review. This feedback can be both positive (good calls) and negative (ideally with follow-up actions or constructive feedback).

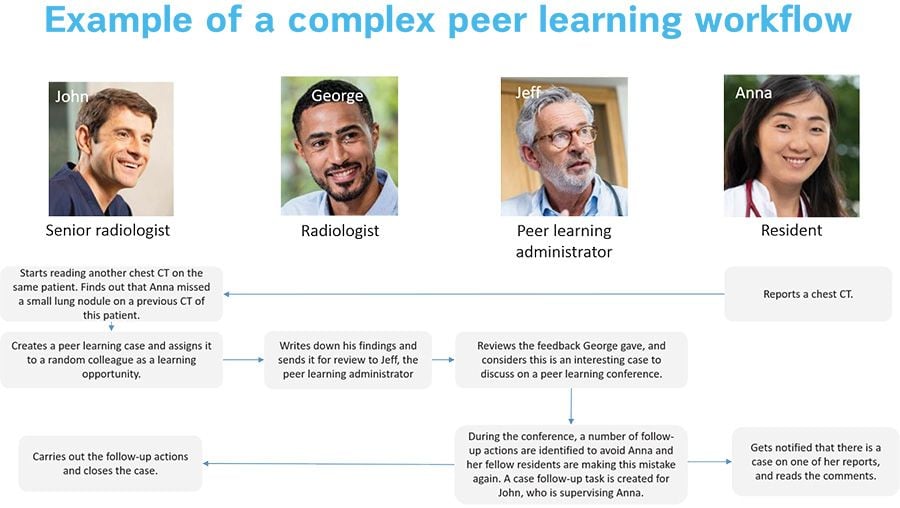

Peer Learning Administrator Role

The peer learning model makes it possible to include a peer learning administrator. The key functions of a peer learning administrator include:

• Act as first reviewer of the cases reported

• Reject cases that are not relevant

• Add missing or additional case data, such as patient history

• Review cases and, if needed, rewrite the original feedback to ensure that it is phrased in a constructive way

• Put cases on the agenda of conferences when needed; for example, a Quality Committee can review cases before they are discussed in a group

• Follow up on actions to be taken or reopen cases when needed

Is the peer learning model easy to implement?

The workflow is very configurable, so an important part of the implementation is the workflow analysis. As with many other workflows, we recommend thinking big but starting small: the department’s entire scope and vision for peer learning should be known upfront, but implementation should be done stepwise so it can be fine-tuned where needed.

Is the peer learning module implementable for all types of facilities or only for larger hospitals or departments?

One of the key properties of the peer learning module is its configurability, which makes it relevant for all types of facilities and departments. Workflows can easily be tuned from very simple (one reviewer, always triggered manually) to very complex (multiple review boards, manual and automatic creation of cases).

The software can also cater perfectly to more traditional peer review workflows, with only a random selection of cases and the registration of scores such as the RADPEER score, or to a combination of both approaches.
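The range from a simple manual workflow to a traditional random-sample review can be pictured as a handful of settings. The Python sketch below is a hypothetical illustration only, not the module’s actual configuration format: `WorkflowConfig`, its field names, `should_create_case` and the 5% sampling rate are all assumptions.

```python
import random
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class WorkflowConfig:
    manual_trigger: bool = True       # users may flag a study themselves
    random_sample_rate: float = 0.0   # share of studies picked automatically
    review_boards: int = 1            # number of review stages
    scoring: Optional[str] = None     # e.g. "RADPEER" for traditional review
    conferences: bool = False         # cases can be put on a meeting agenda


def should_create_case(cfg: WorkflowConfig, flagged_by_user: bool,
                       rng: random.Random) -> bool:
    """Decide whether a read study becomes a peer review/learning case."""
    if cfg.manual_trigger and flagged_by_user:
        return True
    # Automatic creation: sample studies at the configured rate.
    return rng.random() < cfg.random_sample_rate


# Very simple: one reviewer, always triggered manually.
SIMPLE = WorkflowConfig()

# Traditional peer review: random selection plus a score (rate is illustrative).
TRADITIONAL = WorkflowConfig(manual_trigger=False, random_sample_rate=0.05,
                             scoring="RADPEER")

# Combination: manual and automatic creation, two boards, conferences.
COMBINED = WorkflowConfig(random_sample_rate=0.05, review_boards=2,
                          scoring="RADPEER", conferences=True)
```

Keeping the trigger rules in one small function is a design choice of this sketch; it makes it easy to start with `SIMPLE` and move towards `COMBINED` stepwise.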

The peer learning workflow is completely embedded in a radiologist’s routine workflow. Enterprise Imaging is a task-based system. Peer learning tasks are simply tasks like any other and appear in the activities overviews. Depending on the user’s preference, there can be separate activities overviews and/or task lists for peer learning activities, or they can be merged with existing ones.

When a hospital or department implements this feature, do the radiologists lead it, or is there another committee or team that manages it?

That’s completely up to the hospital or department. Some organisations choose to appoint a peer learning administrator, who decides which cases get discussed in meetings or reviews the wording before the original report author receives the feedback. All data is available in the reporting module as well, allowing PACS admins to extract the reports needed for hospital management or for accreditation and certification bodies.

The peer learning module can also be used for a second opinion workflow. Can you explain it a bit more?

Indeed, if you want a second opinion without having the second radiologist’s name on the report, you can trigger a peer learning case on your own report. That’s just one example of how this module can cover other use cases that are not strictly peer learning.

In summary, Agfa HealthCare’s peer learning system is designed to improve collaboration and foster a culture of teamwork and feedback. That culture promotes actionable learning and enables radiology departments to create a continuous improvement cycle. It’s learning at its best. That is our ultimate goal.

Disclosure of conflict of interest: Point-of-View articles are the sole opinion of the author(s) and they are part of the HealthManagement.org Corporate Engagement or Educational Community Programme.

References:

Bruno M (2017) 256 Shades of gray: uncertainty and diagnostic error in radiology. Diagnosis, 4. doi:10.1515/dx-2017-0006

Haas BM, Mogel GT, Attaya HN (2020) Peer learning on a shoe string: success of a distributive model for peer learning in a community radiology practice. Clin Imaging, 59(2):114-118. doi:10.1016/j.clinimag.2019.10.012

Larson DB, Donnelly LF, Podberesky DJ et al. (2017) Peer Feedback, Learning, and Improvement: Answering the Call of the Institute of Medicine Report on Diagnostic Error. Radiology, 283(1):231-241. doi:10.1148/radiol.2016161254